DocIE@XLLM25: In-Context Learning for Information Extraction using Fully Synthetic Demonstrations

Abstract

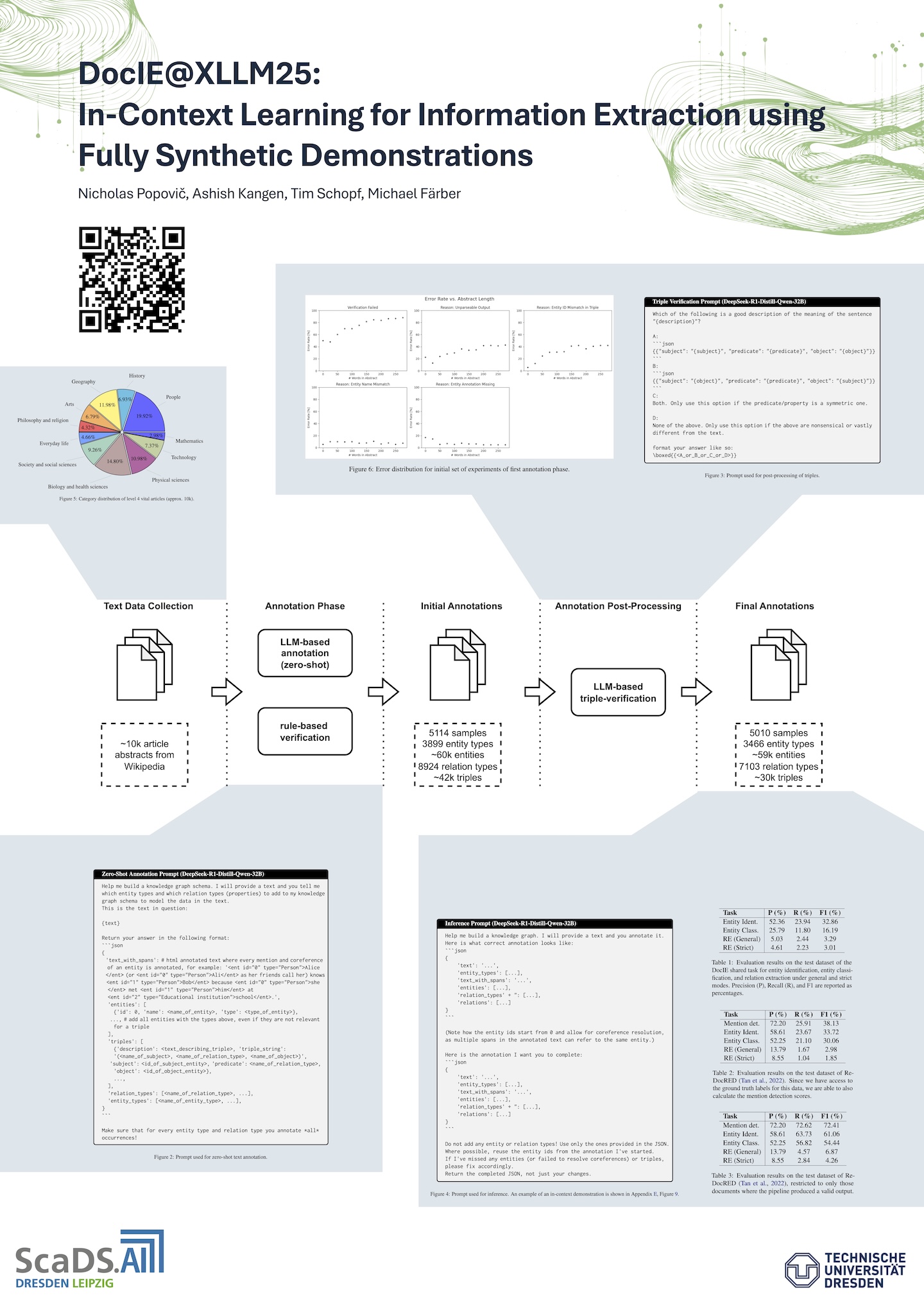

Large, high-quality annotated corpora remain scarce in document-level entity and relation extraction in zero-shot or few-shot settings. In this paper, we present a fully automatic, LLM-based pipeline for synthetic data generation and in-context learning for document-level entity and relation extraction. In contrast to existing approaches that rely on manually annotated demonstrations or direct zero-shot inference, our method combines synthetic data generation with retrieval-based in-context learning, using a reasoning-optimized language model. This allows us to build a high-quality demonstration database without manual annotation and to dynamically retrieve relevant examples at inference time. Based on our approach, we produce a synthetic dataset of over 5k Wikipedia abstracts with approximately 59k entities and 30k relation triples. Finally, we evaluate in-context learning performance on the DocIE shared task, extracting entities and relations from long documents in a zero-shot setting. The code and synthetic dataset are made available for future research.
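To make the idea of retrieving demonstrations at inference time concrete, the following is a minimal sketch, not the pipeline from the paper. It assumes a simple bag-of-words cosine similarity as the retriever (the actual retriever is not described on this page), and the demonstration records, field names (`text`, `triples`), and helper functions are hypothetical and hand-written for illustration, not taken from the released dataset.

```python
import math
import re
from collections import Counter

# Toy demonstration database. These records are invented for illustration;
# they are NOT samples from the released synthetic dataset.
DEMOS = [
    {"text": "Marie Curie was a physicist born in Warsaw.",
     "triples": [("Marie Curie", "place of birth", "Warsaw")]},
    {"text": "The Rhine is a river that flows through Germany.",
     "triples": [("Rhine", "country", "Germany")]},
    {"text": "Alan Turing was a mathematician born in London.",
     "triples": [("Alan Turing", "place of birth", "London")]},
]


def bag_of_words(text):
    """Lowercase the text and count alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, demos, k=2):
    """Return the k demonstrations most similar to the query document.

    Assumption: lexical similarity stands in for whatever retriever
    the real system uses (for example, dense embeddings).
    """
    q = bag_of_words(query)
    ranked = sorted(demos,
                    key=lambda d: cosine(q, bag_of_words(d["text"])),
                    reverse=True)
    return ranked[:k]


def build_prompt(query, demos, k=2):
    """Assemble a few-shot prompt: retrieved demonstrations, then the query."""
    parts = []
    for d in retrieve(query, demos, k):
        triples = "; ".join(f"({h}, {r}, {t})" for h, r, t in d["triples"])
        parts.append(f"Document: {d['text']}\nTriples: {triples}")
    parts.append(f"Document: {query}\nTriples:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt("Ada Lovelace was a mathematician born in London.", DEMOS))
```

The resulting prompt would then be passed to a language model, which completes the final `Triples:` line for the unseen document. Swapping `cosine` over token counts for an embedding-based similarity would change only the `retrieve` function.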

Video

Dataset explorer

Poster

DocIE Poster presented at XLLM25.
